Prediction Demystified

Jed Ludlow

jedludlow.com

%matplotlib inline

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

import time

# Set up seaborn to use slightly larger fonts

sns.set_context("talk")

Objectives

New to Prediction?

- Understand the essential terminology and concepts.

- Get ideas about where to go next.

Experienced Data Scientist?

- A few philosophical things to consider.

- Implications of machine learning from an engineering perspective.

Data Science

- A data scientist is a statistician who lives in San Francisco.

- Data science is statistics on a Mac.

- A data scientist is someone who is better at statistics than any software engineer and better at software engineering than any statistician.

(from https://twitter.com/jeremyjarvis/status/428848527226437632/photo/1)

The Original Data Scientist

W. Edwards Deming

(source: Wikipedia)

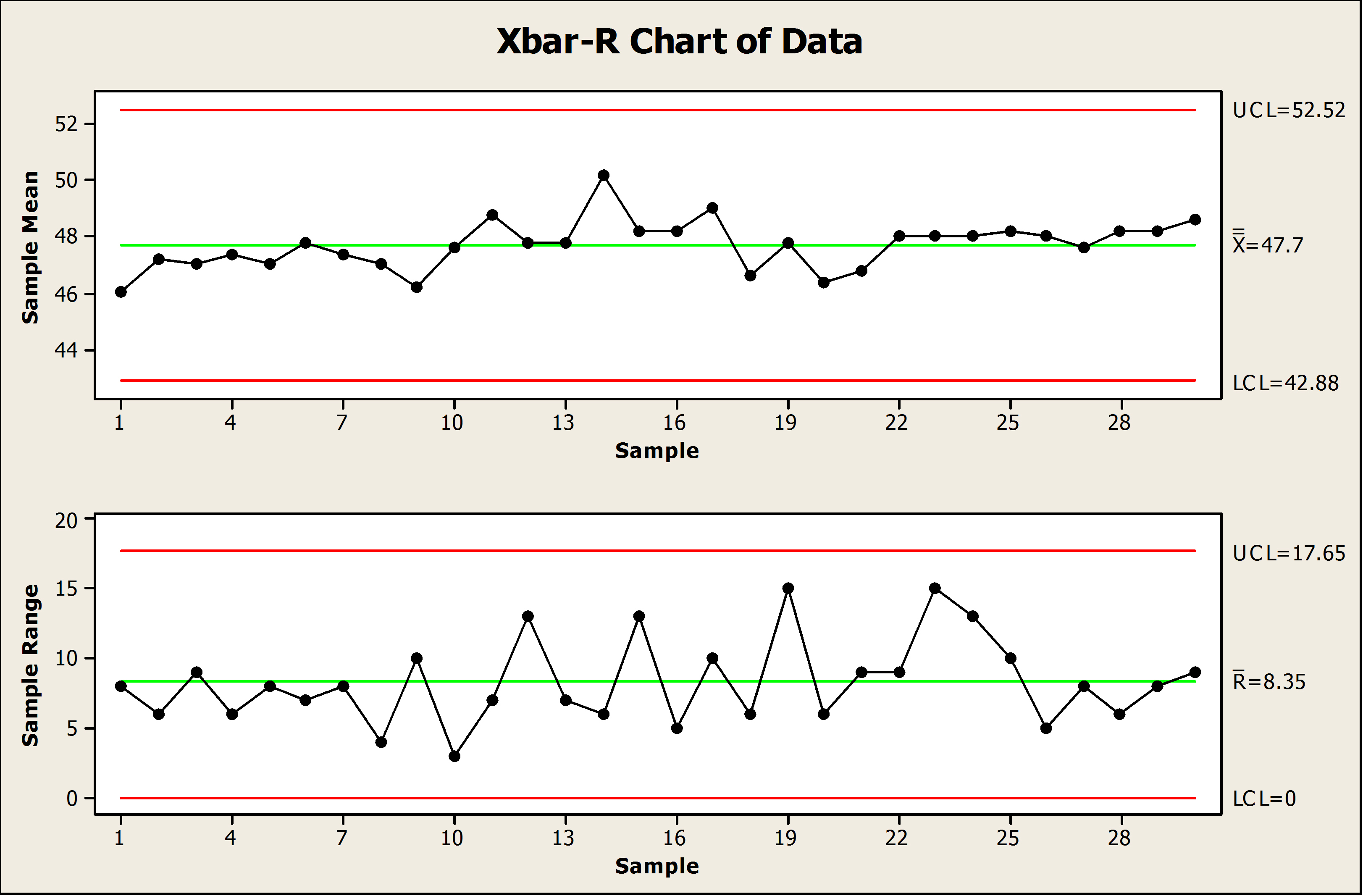

Control Charting is Prediction

(source: http://blog.minitab.com/blog/michelle-paret/control-charts-subgroup-size-matters)

"Hello, World" Prediction Problem: Concept

Predict the output variable (height) as a function of the input feature (age). Accomplish this by fitting a model (linear regression) to the available samples (five boys) in the training data set.

"Hello, World" Prediction Problem: Python

import numpy as np

from sklearn.linear_model import LinearRegression

# Training output samples

height_inches = np.array([[51.0, 56.0, 64.0, 71.0, 69.0]]).T

# Training feature samples

age_years = np.array([[7.8, 10.7, 13.7, 17.5, 20.1]]).T

# Initialize model

model = LinearRegression()

# Train

model.fit(age_years, height_inches)

# Predict

model.predict([[15.0]])  # 2-D input: one sample, one feature

array([[ 63.90023465]])

def plot_boys(age, height, test=None, pred=None):
    # Scatter the training samples passed in by the caller
    plt.plot(age, height, marker='o', ls='none')
    plt.xlabel("Age (years)")
    plt.ylabel("Height (inches)")
    plt.title("Boys")
    if test is not None:
        # Overlay the model's predictions as a line
        plt.plot(test, pred, '-')

plot_boys(age_years, height_inches);

test = np.array([np.linspace(7.0, 21.0)]).T

pred = model.predict(test)

plot_boys(age_years, height_inches, test, pred)

test = np.array([np.linspace(0.0, 50.0)]).T

pred = model.predict(test)

plot_boys(age_years, height_inches, test, pred)

Tall babies! Really tall adults!

from sklearn.preprocessing import PolynomialFeatures

pf = PolynomialFeatures(4)

x_poly = pf.fit_transform(age_years)

model.fit(x_poly, height_inches)

test = np.array([np.linspace(7.0, 21.0)]).T

test_poly = pf.transform(test)

pred = model.predict(test_poly)

plot_boys(age_years, height_inches, test, pred)

Look! No training error!

test = np.array([np.linspace(0.0, 50.0)]).T

test_poly = pf.transform(test)

pred = model.predict(test_poly)

plot_boys(age_years, height_inches, test, pred)

Really tall babies! "Unphysical" heights for adults!

Skillful Prediction

"I've beat this drum a hundred times, but what statistical models should do is to predict what will happen, given or conditioned on the data which came before and the premises which led to the particular model used. Then, since we have a prediction, we wait for confirmatory, never-observed-before data. If the model was good, we will have skillful predictions. If not, we start over."

-William M. Briggs, Statistician to the Stars!

(source: http://wmbriggs.com/post/14532)

Lessons from "Hello, World" Prediction Problem

- Skillful prediction cannot be measured against the training data set. We must test against data not included in the training.

- Reducing the training error is not necessarily the primary objective of model development.

- A flexible model (polynomial regression) is not always better than an inflexible model (linear regression).

- Extrapolation beyond the bounds of the training data can produce unexpected results.
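The contrast between training error and extrapolation can be reproduced without any libraries. The following is a minimal sketch, not the notebook's scikit-learn code: `fit_line` is an ordinary least-squares line, and `fit_interpolant` is a Lagrange polynomial standing in for the degree-4 fit, on the reasoning that five distinct ages admit exactly one polynomial of degree four or lower through every sample. Both function names are illustrative.

```python
import math

# Training samples from the "Hello, World" problem above.
ages = [7.8, 10.7, 13.7, 17.5, 20.1]
heights = [51.0, 56.0, 64.0, 71.0, 69.0]


def fit_line(xs, ys):
    """Return a predictor for the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_interpolant(xs, ys):
    """Return the Lagrange polynomial that passes through every sample."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def rmse(model, xs, ys):
    """Root-mean-square error of a predictor on the given samples."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


line = fit_line(ages, heights)
poly = fit_interpolant(ages, heights)

print("training RMSE, line:", round(rmse(line, ages, heights), 2))
print("training RMSE, poly:", round(rmse(poly, ages, heights), 2))
# Compare both models inside (15) and far outside (0, 50) the training range.
for age in (0.0, 15.0, 50.0):
    print(age, round(line(age), 1), round(poly(age), 1))
```

The flexible model reproduces every training sample, yet the two models diverge sharply once the age leaves the 7.8 to 20.1 range covered by the training data.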

Kaggle: Predicting Survival on the Titanic

- An introductory competition for knowledge sharing.

- Fully worked examples in Excel, Python, and R.

- Upload your solution to Kaggle for scoring.

- A great first example for a classification problem.

Machine Learning Toolboxes

- Very popular open source packages available in many programming languages.

  - R

  - Python

- Growing number of "Machine Learning as a Service" companies.

- Big companies offering prediction APIs hosted in the cloud.

  - Microsoft Azure Machine Learning

  - Google Prediction API

import csv

dtypes = {
    "PassengerId": np.int64,
    "Survived": object,
    "Pclass": np.int64,
    "Name": object,
    "Sex": object,
    "Age": np.float64,
}

Titanic Training Set

train_df = pd.read_csv("train.csv", dtype=dtypes)

train_df

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |

| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |

| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |

| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35 | 1 | 0 | 113803 | 53.1000 | C123 | S |

| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35 | 0 | 0 | 373450 | 8.0500 | NaN | S |

| 5 | 6 | 0 | 3 | Moran, Mr. James | male | NaN | 0 | 0 | 330877 | 8.4583 | NaN | Q |

| 6 | 7 | 0 | 1 | McCarthy, Mr. Timothy J | male | 54 | 0 | 0 | 17463 | 51.8625 | E46 | S |

| 7 | 8 | 0 | 3 | Palsson, Master. Gosta Leonard | male | 2 | 3 | 1 | 349909 | 21.0750 | NaN | S |

| 8 | 9 | 1 | 3 | Johnson, Mrs. Oscar W (Elisabeth Vilhelmina Berg) | female | 27 | 0 | 2 | 347742 | 11.1333 | NaN | S |

| 9 | 10 | 1 | 2 | Nasser, Mrs. Nicholas (Adele Achem) | female | 14 | 1 | 0 | 237736 | 30.0708 | NaN | C |

| 10 | 11 | 1 | 3 | Sandstrom, Miss. Marguerite Rut | female | 4 | 1 | 1 | PP 9549 | 16.7000 | G6 | S |

| 11 | 12 | 1 | 1 | Bonnell, Miss. Elizabeth | female | 58 | 0 | 0 | 113783 | 26.5500 | C103 | S |

| 12 | 13 | 0 | 3 | Saundercock, Mr. William Henry | male | 20 | 0 | 0 | A/5. 2151 | 8.0500 | NaN | S |

| 13 | 14 | 0 | 3 | Andersson, Mr. Anders Johan | male | 39 | 1 | 5 | 347082 | 31.2750 | NaN | S |

| 14 | 15 | 0 | 3 | Vestrom, Miss. Hulda Amanda Adolfina | female | 14 | 0 | 0 | 350406 | 7.8542 | NaN | S |

| 15 | 16 | 1 | 2 | Hewlett, Mrs. (Mary D Kingcome) | female | 55 | 0 | 0 | 248706 | 16.0000 | NaN | S |

| 16 | 17 | 0 | 3 | Rice, Master. Eugene | male | 2 | 4 | 1 | 382652 | 29.1250 | NaN | Q |

| 17 | 18 | 1 | 2 | Williams, Mr. Charles Eugene | male | NaN | 0 | 0 | 244373 | 13.0000 | NaN | S |

| 18 | 19 | 0 | 3 | Vander Planke, Mrs. Julius (Emelia Maria Vande... | female | 31 | 1 | 0 | 345763 | 18.0000 | NaN | S |

| 19 | 20 | 1 | 3 | Masselmani, Mrs. Fatima | female | NaN | 0 | 0 | 2649 | 7.2250 | NaN | C |

| 20 | 21 | 0 | 2 | Fynney, Mr. Joseph J | male | 35 | 0 | 0 | 239865 | 26.0000 | NaN | S |

| 21 | 22 | 1 | 2 | Beesley, Mr. Lawrence | male | 34 | 0 | 0 | 248698 | 13.0000 | D56 | S |

| 22 | 23 | 1 | 3 | McGowan, Miss. Anna "Annie" | female | 15 | 0 | 0 | 330923 | 8.0292 | NaN | Q |

| 23 | 24 | 1 | 1 | Sloper, Mr. William Thompson | male | 28 | 0 | 0 | 113788 | 35.5000 | A6 | S |

| 24 | 25 | 0 | 3 | Palsson, Miss. Torborg Danira | female | 8 | 3 | 1 | 349909 | 21.0750 | NaN | S |

| 25 | 26 | 1 | 3 | Asplund, Mrs. Carl Oscar (Selma Augusta Emilia... | female | 38 | 1 | 5 | 347077 | 31.3875 | NaN | S |

| 26 | 27 | 0 | 3 | Emir, Mr. Farred Chehab | male | NaN | 0 | 0 | 2631 | 7.2250 | NaN | C |

| 27 | 28 | 0 | 1 | Fortune, Mr. Charles Alexander | male | 19 | 3 | 2 | 19950 | 263.0000 | C23 C25 C27 | S |

| 28 | 29 | 1 | 3 | O'Dwyer, Miss. Ellen "Nellie" | female | NaN | 0 | 0 | 330959 | 7.8792 | NaN | Q |

| 29 | 30 | 0 | 3 | Todoroff, Mr. Lalio | male | NaN | 0 | 0 | 349216 | 7.8958 | NaN | S |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 861 | 862 | 0 | 2 | Giles, Mr. Frederick Edward | male | 21 | 1 | 0 | 28134 | 11.5000 | NaN | S |

| 862 | 863 | 1 | 1 | Swift, Mrs. Frederick Joel (Margaret Welles Ba... | female | 48 | 0 | 0 | 17466 | 25.9292 | D17 | S |

| 863 | 864 | 0 | 3 | Sage, Miss. Dorothy Edith "Dolly" | female | NaN | 8 | 2 | CA. 2343 | 69.5500 | NaN | S |

| 864 | 865 | 0 | 2 | Gill, Mr. John William | male | 24 | 0 | 0 | 233866 | 13.0000 | NaN | S |

| 865 | 866 | 1 | 2 | Bystrom, Mrs. (Karolina) | female | 42 | 0 | 0 | 236852 | 13.0000 | NaN | S |

| 866 | 867 | 1 | 2 | Duran y More, Miss. Asuncion | female | 27 | 1 | 0 | SC/PARIS 2149 | 13.8583 | NaN | C |

| 867 | 868 | 0 | 1 | Roebling, Mr. Washington Augustus II | male | 31 | 0 | 0 | PC 17590 | 50.4958 | A24 | S |

| 868 | 869 | 0 | 3 | van Melkebeke, Mr. Philemon | male | NaN | 0 | 0 | 345777 | 9.5000 | NaN | S |

| 869 | 870 | 1 | 3 | Johnson, Master. Harold Theodor | male | 4 | 1 | 1 | 347742 | 11.1333 | NaN | S |

| 870 | 871 | 0 | 3 | Balkic, Mr. Cerin | male | 26 | 0 | 0 | 349248 | 7.8958 | NaN | S |

| 871 | 872 | 1 | 1 | Beckwith, Mrs. Richard Leonard (Sallie Monypeny) | female | 47 | 1 | 1 | 11751 | 52.5542 | D35 | S |

| 872 | 873 | 0 | 1 | Carlsson, Mr. Frans Olof | male | 33 | 0 | 0 | 695 | 5.0000 | B51 B53 B55 | S |

| 873 | 874 | 0 | 3 | Vander Cruyssen, Mr. Victor | male | 47 | 0 | 0 | 345765 | 9.0000 | NaN | S |

| 874 | 875 | 1 | 2 | Abelson, Mrs. Samuel (Hannah Wizosky) | female | 28 | 1 | 0 | P/PP 3381 | 24.0000 | NaN | C |

| 875 | 876 | 1 | 3 | Najib, Miss. Adele Kiamie "Jane" | female | 15 | 0 | 0 | 2667 | 7.2250 | NaN | C |

| 876 | 877 | 0 | 3 | Gustafsson, Mr. Alfred Ossian | male | 20 | 0 | 0 | 7534 | 9.8458 | NaN | S |

| 877 | 878 | 0 | 3 | Petroff, Mr. Nedelio | male | 19 | 0 | 0 | 349212 | 7.8958 | NaN | S |

| 878 | 879 | 0 | 3 | Laleff, Mr. Kristo | male | NaN | 0 | 0 | 349217 | 7.8958 | NaN | S |

| 879 | 880 | 1 | 1 | Potter, Mrs. Thomas Jr (Lily Alexenia Wilson) | female | 56 | 0 | 1 | 11767 | 83.1583 | C50 | C |

| 880 | 881 | 1 | 2 | Shelley, Mrs. William (Imanita Parrish Hall) | female | 25 | 0 | 1 | 230433 | 26.0000 | NaN | S |

| 881 | 882 | 0 | 3 | Markun, Mr. Johann | male | 33 | 0 | 0 | 349257 | 7.8958 | NaN | S |

| 882 | 883 | 0 | 3 | Dahlberg, Miss. Gerda Ulrika | female | 22 | 0 | 0 | 7552 | 10.5167 | NaN | S |

| 883 | 884 | 0 | 2 | Banfield, Mr. Frederick James | male | 28 | 0 | 0 | C.A./SOTON 34068 | 10.5000 | NaN | S |

| 884 | 885 | 0 | 3 | Sutehall, Mr. Henry Jr | male | 25 | 0 | 0 | SOTON/OQ 392076 | 7.0500 | NaN | S |

| 885 | 886 | 0 | 3 | Rice, Mrs. William (Margaret Norton) | female | 39 | 0 | 5 | 382652 | 29.1250 | NaN | Q |

| 886 | 887 | 0 | 2 | Montvila, Rev. Juozas | male | 27 | 0 | 0 | 211536 | 13.0000 | NaN | S |

| 887 | 888 | 1 | 1 | Graham, Miss. Margaret Edith | female | 19 | 0 | 0 | 112053 | 30.0000 | B42 | S |

| 888 | 889 | 0 | 3 | Johnston, Miss. Catherine Helen "Carrie" | female | NaN | 1 | 2 | W./C. 6607 | 23.4500 | NaN | S |

| 889 | 890 | 1 | 1 | Behr, Mr. Karl Howell | male | 26 | 0 | 0 | 111369 | 30.0000 | C148 | C |

| 890 | 891 | 0 | 3 | Dooley, Mr. Patrick | male | 32 | 0 | 0 | 370376 | 7.7500 | NaN | Q |

891 rows × 12 columns
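Several rows in the excerpt above have a missing Age (shown as NaN). The notebook does not show how the cleaned training file was produced, so the following is only one plausible cleaning step, sketched with the standard library on three rows copied from the table: fill each missing age with the median of the known ages. The helper name `fill_missing_age`, the choice of median imputation, and the assumption that a missing value appears as an empty field in the raw CSV are all illustrative.

```python
import csv
import io
import statistics

# Three rows copied from the training-set excerpt. The empty Age field on
# the last row is assumed to be how a missing value looks in the raw file.
RAW = """PassengerId,Survived,Pclass,Name,Sex,Age
1,0,3,"Braund, Mr. Owen Harris",male,22
3,1,3,"Heikkinen, Miss. Laina",female,26
6,0,3,"Moran, Mr. James",male,
"""


def fill_missing_age(rows):
    """Return copies of rows with Age as float; missing ages get the median."""
    known = [float(r["Age"]) for r in rows if r["Age"] != ""]
    median_age = statistics.median(known)
    cleaned = []
    for r in rows:
        row = dict(r)  # copy so the raw rows stay untouched
        row["Age"] = float(r["Age"]) if r["Age"] != "" else median_age
        cleaned.append(row)
    return cleaned


rows = list(csv.DictReader(io.StringIO(RAW)))
cleaned = fill_missing_age(rows)
for row in cleaned:
    print(row["Name"], row["Age"])
```

Imputation is a modeling decision in its own right; dropping incomplete rows or adding a "missing" indicator are equally defensible alternatives.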

import googleprediction

model = googleprediction.GooglePredictor(

"eternal-photon-824",

"hello-world-red-leader/train_cleaned.csv",

"hastalapasta",

"client_secrets.json")

Prediction with Google Prediction API

- Initiate a project through Google Developers Console.

- Enable prediction API and cloud storage for that project.

- Upload training data (specially formatted CSV file) to the storage bucket.

- Install Google API Python Client.

- Get OAuth 2.0 authentication flow working. (Yes, really.)

- Call `insert` with appropriately formatted JSON to configure and train the model.

- Call `predict` with similarly formatted JSON to make predictions.

- Pay for training and prediction usage. (Free 60-day trial available.)

Luckily, there are good examples here: https://github.com/google/google-api-python-client

def survived(pred):
    # Take the first element of the result as the predicted label
    pred = pred[0]
    if pred == u'1':
        print("YES")
    else:
        print("NO")

Titanic Survival Model Using Google Prediction

- Fit a model to the Kaggle Titanic survival training set after some data cleaning.

- Model is live on Google's cloud services.

- Let's check training error by predicting back against a known survivor from the training set.

pred = model.predict([[

'1', # Fare class

'Spencer Mrs William Augustus Marie Eugenie', # Name

'female', # Gender

20.2, # Age

1, # Number of parents or children aboard

0, # Number of siblings or spouse aboard

146.5208, # Fare price

],])

survived(pred)

YES

We could now make predictions for all the passengers in the test set and upload our solution to Kaggle for scoring, but it might be more fun....

pred = model.predict([[

'1', # Fare class

'Frank Lampard', # Name

'male', # Gender

36.0, # Age

0, # Number of parents or children aboard

0, # Number of siblings or spouse aboard

20.0, # Fare price

],])

survived(pred)

NO

pred = model.predict([[

'1', # Fare class

'Frank Lampard', # Name

'male', # Gender

36.0, # Age

0, # Number of parents or children aboard

0, # Number of siblings or spouse aboard

500.0, # Fare price

],])

survived(pred)

YES

Lessons from Titanic Survival Classifier

- Real data is almost never tidy. Some cleaning is required.

- Some model types are by nature black boxes that cannot be inspected on the inside. You might be able to discern some things about how the model is behaving by feeding it interesting test cases, but that's not the same as having full access to the model internals.

- Choose the machine learning toolbox to match the modeling need and the implementation plan.
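Probing a black-box model with test cases, as in the fare experiment above, can be automated by sweeping one input while holding the others fixed. The sketch below assumes only that the model exposes a callable taking a feature list and returning a label. `toy_predict` is a made-up stand-in (not the Google-hosted model), and its fare threshold of 100 is invented purely so the example runs.

```python
def sweep_feature(predict, base_case, index, values):
    """Vary one feature of base_case and record the prediction for each value."""
    results = []
    for value in values:
        case = list(base_case)  # copy so the base case is never mutated
        case[index] = value
        results.append((value, predict(case)))
    return results


def first_flip(results):
    """Return the first swept value whose prediction differs from the initial one."""
    baseline = results[0][1]
    for value, label in results[1:]:
        if label != baseline:
            return value
    return None


def toy_predict(case):
    """Hypothetical stand-in for a remote model: survival depends only on fare."""
    fare = case[-1]
    return "1" if fare >= 100.0 else "0"


# Feature order mirrors the predict calls above:
# class, name, gender, age, two family counts, fare.
base = ["1", "Frank Lampard", "male", 36.0, 0, 0, 20.0]
fares = [20.0, 50.0, 100.0, 200.0, 500.0]
results = sweep_feature(toy_predict, base, index=6, values=fares)
print(results)
print("prediction first changes at fare:", first_flip(results))
```

A sweep like this shows where a decision boundary sits along one axis, but it says nothing about why the model put it there.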

Deep Learning

"Deep architectures are composed of multiple levels of non-linear operations, such as in neural nets with many hidden layers..."

-Yoshua Bengio, Learning Deep Architectures for AI

- Input feature vectors are very large. Thousands of features are common.

- The model is very flexible, sometimes employing millions of parameters.

- Training algorithms use sophisticated methods to avoid overfitting.

- Training generally requires serious hardware (GPUs) to finish in a reasonable time.
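To see how parameter counts reach the millions, consider a plain fully connected network, where each layer contributes one weight per input-output pair plus one bias per output. The layer sizes below are hypothetical, chosen only for illustration, and convolutional layers (which share weights) would be counted differently.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # weight matrix between consecutive layers
        total += n_out         # one bias per output unit
    return total


# Hypothetical network: 784 inputs, two hidden layers of 1000 units, 10 outputs.
sizes = [784, 1000, 1000, 10]
print(count_parameters(sizes))  # 1796010
```

Even this small illustrative network has far more parameters than the five-sample regression earlier had data points, which is why training methods for such models put so much effort into avoiding overfitting.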

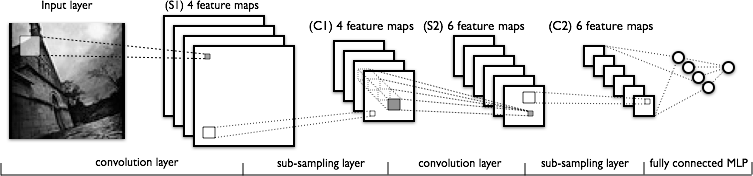

Convolutional Neural Networks

(source: http://deeplearning.net/tutorial/lenet.html#lenet)

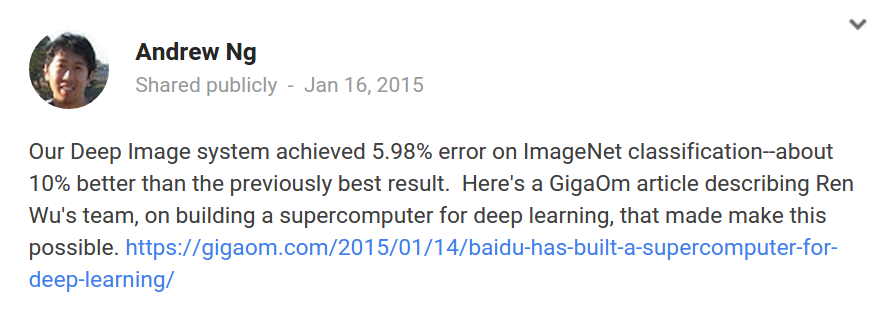

Records Were Made to Be Broken

(source: https://plus.google.com/113710395888978478005/posts/Gx3w9E14k2P)

(source: http://danielnouri.org/notes/2014/12/17/using-convolutional-neural-nets-to-detect-facial-keypoints-tutorial/)

Validation

What are the consequences of error in your predictions? Is safety paramount?

- Divide the available data into three sets:

  - Training set for base model training.

  - Cross-validation set for model selection and parameter tuning.

  - Test set for estimating final accuracy.
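The three-way division can be sketched in a few lines of standard-library Python. The 60/20/20 proportions, the fixed seed, and the function name `three_way_split` are assumptions for illustration; nothing here prescribes particular split sizes.

```python
import random


def three_way_split(samples, train_frac=0.6, cv_frac=0.2, seed=0):
    """Shuffle samples and split them into training, cross-validation, and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = int(len(shuffled) * train_frac)
    n_cv = int(len(shuffled) * cv_frac)
    train = shuffled[:n_train]
    cv = shuffled[n_train:n_train + n_cv]
    test = shuffled[n_train + n_cv:]       # whatever remains
    return train, cv, test


# 891 passenger IDs, matching the size of the Titanic training set.
train, cv, test = three_way_split(range(1, 892))
print(len(train), len(cv), len(test))
```

The test set should be consulted once, at the end; reusing it for tuning effectively turns it into a second cross-validation set.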

For measurements of skillful prediction, "...wait for confirmatory, never-observed-before data."

“In the course of my work in the Potti lab, I discovered what I perceived to be problems in the predictor models that made it difficult for me to continue working in that environment,” he said in an email to The Cancer Letter.

(source: http://www.cancerletter.com/articles/20150109_1)

Good Software Engineering Practice

(source: http://research.google.com/pubs/pub43146.html)

Concluding Thoughts

- Most prediction problems can be described using a common framework of definitions. In that sense, they are not mysterious. But the latest deep learning models are bordering on magical!

- Seek the simplest possible model that attains the required level of prediction performance. Keep a close eye on your "technical debt" credit card bills.

- Validate, validate, and then keep validating.

- Choose your prediction problems wisely.

- The existence of a large data set does not necessarily imply that a valuable model is lurking inside.

- Chances are you don't yet have the data sets for the most valuable problems. Getting that data will require concerted investment.

Thank You!

Jed Ludlow

jedludlow.com